Understanding Model Predictions: A Comparative Analysis of SHAP and LIME on Various ML Algorithms

DOI:

https://doi.org/10.59738/jstr.v5i1.23(17-26).eaqr5800

Keywords:

Machine Learning, SHAP, LIME

Abstract

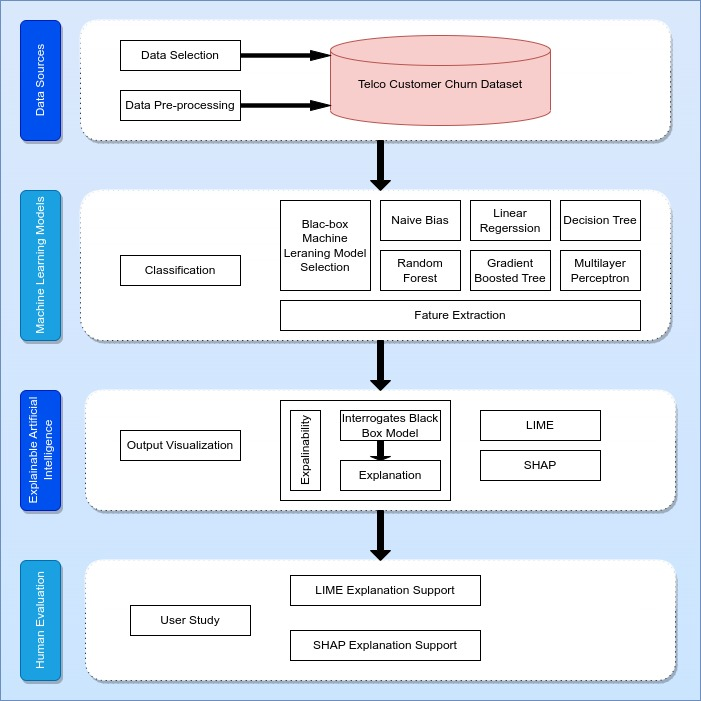

Interpreting machine learning models is essential to ensuring the transparency and reliability of prediction systems across many domains. This study presents a comparative analysis of two model-agnostic explainability methods, SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-Agnostic Explanations), applied to a variety of machine learning algorithms. It focuses on the performance of these algorithms on a dataset in order to offer nuanced insights into how model interpretability varies from one algorithm to another. The findings shed new light on the relative performance of SHAP and LIME: while both methods adequately explain model predictions, they behave differently across algorithms and datasets. These findings contribute to the ongoing discussion on model interpretability and provide practical guidance for using SHAP and LIME to increase transparency in machine learning applications.

License

Copyright (c) 2024 Journal of Scientific and Technological Research

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.